Computer gaming offers a unique test-bed and market for advanced concepts in computer graphics, human computer interaction (HCI), computer-supported collaborative work (CSCW), intelligent agents, and perception. In addition, games provide an engaging way to discover and explore research issues in the relatively young fields of wearable computing and augmented reality (AR). This paper presents WARPING, a developing architecture for augmented reality, and describes two gaming systems implemented in the architecture. Users interact with the mobile and stationary platforms through gestures, voice, head movement, location, and physical objects. Computer vision techniques are used extensively in an attempt to untether the user from the equipment normally associated with virtual environments. In this manner, we hope to encourage more seamless interactions between the user's virtual and physical environments and work towards gaming in less structured environments.

Computer gaming provides a unique prototyping arena for human-computer interactions. Due to the entertaining nature of the gaming interactions, users are willing to explore innovative metaphors, modalities, and hardware even when they are not as apparent or fluid as the designer might have hoped. In addition, there is a certain universality of a sense of play that entices users who would not be interested in testing prototype systems normally. In fact, sometimes researchers have to limit access to their new systems for fear of premature release.

Another advantage is that game play can be designed to hide limitations in the current implementation of a system while exploring its potential. In the past, entertainment platforms have been used for explorations in computer supported collaborative work (CSCW) [Evard93], artificial intelligence [Agre87], agents [Foner97], on-line communities and education [Bruckman97], perception [Wren95,Wren99], and synthetic character development [Johnson99,Kline99], to name a few. Currently, computer gaming provides an excellent framework for working in wearable computing and augmented reality. In the constructs of a game, CSCW, HCI, graphics, autonomous agents, mobile sensing and pattern recognition, wireless networking, and distributed databases can be explored in a community of wearable computer users. As infrastructure needs are determined and the infrastructure improved, new metaphors and uses can be introduced. With time, these improvements can be directed towards development of longer term, everyday-use wearable computing.

While the development of optimized three-dimensional graphics engines has dominated industry interest in the past, one of the major costs of creating current generation computer games is the development of content. Developing an entire 3D world for the player to explore is time consuming and labor intensive. In fact, the complexity of world building required to create a compelling experience is directly dependent on the dimensions of movement allowed to a player's character.

With augmented reality, the virtual world is composited with the physical [Starner97], resulting in obvious restrictions to the simulation, but not without significant benefits. First, the game designer already has a pre-made environment for his game, that of the user's physical surroundings. Thus, the designer can concentrate on creating just the virtual artifacts that deviate from the physical world in the game. For example, an augmented reality game may be as simple as a scavenger hunt of virtual flags hidden in the physical environment. More compelling effects may emulate the abilities of the protagonists in myths and modern stories in science fiction and fantasy. For example, the augmented reality hardware may seem to augment the senses of the player, giving him the ability to see through physical walls to virtual objects in the game, hear a whisper at great distances, see other players' "good" or "evil" auras to avoid deception, follow lingering "scents", or detect "dangerous" hidden traps. In addition, the system may associate graphics with the player's physical body, allowing him to shoot fireballs from his hands, envelop himself in a force field, or leave a trail of glowing footprints.

Wearable computers are designed to assist the user while he performs another, primary task. In the case of augmented reality gaming, wearable computers composite appropriate artifacts into the user's visual and auditory environments while he is interacting with the physical world. Typically, either monocular or see-through displays are used to create a graphical visual overlay while, for auditory overlay, headphones are mounted in front of the ears in a manner that does not block sound from the physical environment.

However, to perform these overlays, the wearable often requires a sense of the user's location and current head rotation. This information can be obtained using various methods: GPS, RFID, infrared, inertial sensors, and cameras mounted on the head-mounted display. The visual method is particularly interesting due to the additional information it can provide. By mounting cameras near the user's eyes and microphones near the user's ears, the wearable computer can "see" as the user sees and "hear" as the user hears. By recognizing the user's current context, the wearable adapts both its behavior and its input and output modalities appropriately to a given situation. This technique grounds user interface design in perception and may encourage more seemingly intelligent interfaces as the computer begins to understand its user from his everyday behavior.

Position is often the first piece of information desired by the creators of augmented realities - and for good reason. In an augmented reality game, if the positions of the users and the objects that the users might interact with are known through time as with active RFID transmitters [Want95], many inferences can be made about the "where, when, who, and what" of the players' interactions. In addition, physical constraints such as gravity, inertia, and the limits of travel time from one position to another can help sensing accuracy. These known constraints on the physical world can also help game designers determine logical ranges of effects for players' actions. For example, a virtual monster conjured by a player in one room should not be able to see or be seen by players not in the same region.

Wearable computers also allow the observation of the player. Body posture, hand gestures, lip motion, voice, and biosignals might be used as input to the game. For example, a player taking on the role of a wizard may use hand gestures and recite particular incantations which, when recognized by the computer, cause a spell to be cast. In order to create a particular mood, the system may measure a player's heart beat and respiration and play amplified heart beat and respiration sounds in time to, or a little faster than, the player's own.

Telecommunications can provide another advantage to using wearable computers for gaming. When face-to-face, players can collaborate using a mixture of real and virtual objects. Instead of using a blackboard one player might gesture with his finger, drawing a trail of symbols and graphs for the other players to read or manipulate [Starner97]. Similarly, players could collaborate in assembling virtual artifacts. These artifacts may provide clues on how to proceed in the game. Players might also collaborate across great distances using their wearables. For example, telepathy could be simulated in a game by allowing players to type short notes to each other using their wearable computers.

In some games, especially role-playing games (see below), a game moderator (GM) may be advantageous. A GM enforces the game's rules and may introduce new factors or players to see that the game remains balanced and enjoyable.

Wearable computers can assist the GM by concentrating the information about the active players, areas, and objects in the game on one map. The GM could use such a map on his wearable computer to monitor plot development while he tended to situations that required his physical presence.

In addition, this concentration of game information on one computer allows for more complex variants of the situations described above. For example, instead of having a model of telepathy where the sender controls exactly who receives his message, the message might be sent to every telepath within a certain distance of the sender's location. In practice, when a "telepath" sends a "mental" message, the message first goes through the GM's computer, which maintains the location of each active player. The GM's computer then determines which telepathic players are close enough and strong enough to receive the sender's message and delivers the message to each of these players' computers. Similarly, "empaths" might receive appropriate messages when a wearable computer of a nearby player senses an increase of heart or respiration rate in its user [Healey98]. If the GM's computer keeps a time log of players' positions, the GM could simulate a highly contagious "disease" introduced by a given player. Whenever a participant in the game interacts with an infected player, the GM's computer would inform them that they are infected after the appropriate incubation period [Colella98]. Furthermore, a GM's log of events during the course of a game could allow "clairvoyant" skills in certain players, where they could see the history of a physical place or person. Finally, central communication hubs would allow groups of players in a game to simulate a "hive mind," where each member of the group is informed of the other members' actions at all times [Starner97]. Such tight collaboration may prove a significant advantage in distributed AR games.
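The distance-limited telepathy described above can be illustrated with a minimal sketch. The `Player` record, the `strength` multiplier, and the `base_range` value are hypothetical names and numbers chosen for illustration; the paper does not specify how range or strength would be modeled.

```python
import math
from dataclasses import dataclass

@dataclass
class Player:
    name: str
    x: float
    y: float
    telepath: bool = False
    strength: float = 1.0  # hypothetical multiplier on receiving range

def route_telepathy(sender, players, base_range=10.0):
    """Return the names of telepaths close and strong enough to receive."""
    recipients = []
    for p in players:
        if p is sender or not p.telepath:
            continue
        distance = math.hypot(p.x - sender.x, p.y - sender.y)
        # Assumed rule: a stronger telepath can receive from farther away.
        if distance <= base_range * p.strength:
            recipients.append(p.name)
    return recipients

players = [
    Player("ana", 0.0, 0.0, telepath=True),
    Player("ben", 3.0, 4.0, telepath=True),                 # 5 units away
    Player("cy", 30.0, 0.0, telepath=True, strength=2.0),   # 30 units away
    Player("dee", 1.0, 1.0, telepath=False),
]
recipients = route_telepathy(players[0], players)
```

Because the GM's computer holds every position, the sender never needs to know who is in range; the same lookup could in principle drive the "empath" and "disease" variants.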

Commonly, computer games are classified as puzzles, strategy, simulations, action-adventures, sports, role-playing, fighters, shooters, and a relatively uncommon class we will refer to as "agent controller." Each type has different characteristics that can be exploited by wearable computers or augmented reality.

The Wearable Augmented Reality for Personal, Intelligent, and Networked Gaming (WARPING) system combines several advanced perception and interaction techniques into a foundation for creating augmented reality games. Currently, WARPING has three available platforms: an augmented desktop, a high-end visual/audio mobile headset equipped with two cameras and a microphone, and an inexpensive location-aware audio system based on a commercial MP3 player and radio frequency beacons.

WARPING's augmented desktop, the Perceptive Workbench, extends the utility of the Responsive Workbench [Kruger95], a project based on the Immersive Workbench by Fakespace. The Perceptive Workbench can reconstruct 3D virtual representations of previously unseen real-world objects placed on the workbench's surface. In addition, the Perceptive Workbench identifies and tracks such objects as they are manipulated on the desk's surface and allows the user to interact with the augmented environment through 2D and 3D gestures. These gestures can be made on the plane of the desk's surface or in the 3D space above the desk. Taking its cue from the user's actions, the Perceptive Workbench switches between these modes automatically, and all interaction is done through computer vision, freeing the user from the wires of traditional sensing techniques. A more extensive treatment of the Perceptive Workbench with comparisons to the current literature can be found in Leibe et al. [Leibe2000].

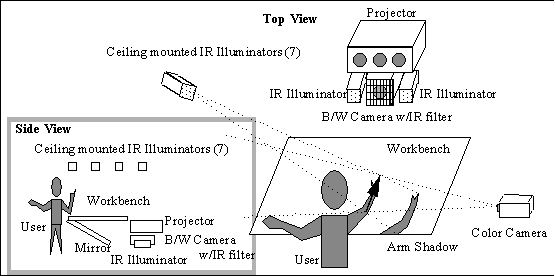

Figure 1: Apparatus for the Perceptive Workbench

The Perceptive Workbench consists of a large wooden desk, a large frosted glass surface for display, an equally large mirror, and a projector (see Figure 1). Images are displayed on the workbench by an Electrohome Marquee 8500 projection system by reflecting the inverted image onto a mirror mounted diagonally under the desk, thus correctly displaying the projection to a viewer opposite the side of projection and above the frosted glass surface. The unit may display stereoscopic images through the use of shutter glasses. In the past, the workbench employed wired pinch gloves and electromagnetic trackers for user input. However, the Perceptive Workbench attempts to replace that functionality through computer vision.

As a basic building block for our interaction framework, we want to enable the user to manipulate virtual environments by placing objects on the desk surface. The system should recognize these objects and track their positions and orientations while they are being moved over the table. Extending a technique initially designed by the first author for use in Ullmer and Ishii's MetaDesk [Ullmer97], we have adapted the workbench to use near-infrared (IR) light and computer vision to identify and track objects placed on the desk's surface. A low-cost black and white CCD camera is mounted behind the desk, close to the projector. Two near-infrared light sources are mounted adjacent to the projector and camera systems behind the desk. Illumination provided by the IR spot-lights is invisible to the human eye and does not interfere with the graphics projected on the desk's surface in any way. However, most black and white cameras can capture this near-IR light very well. A visible light blocking, IR-pass filter is placed in front of the lens of the camera which allows the IR vision system to be used regardless of what is displayed on the workbench.

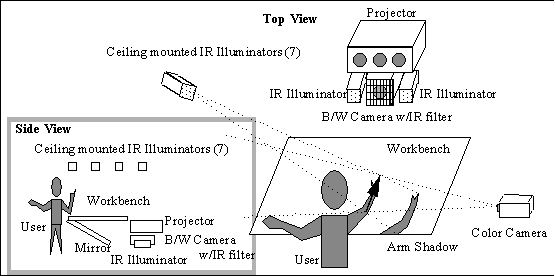

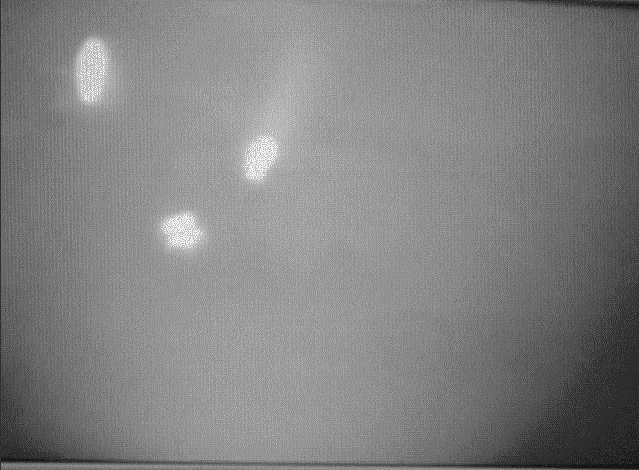

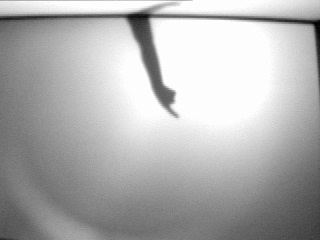

Objects close to the desk surface (including the user's hands) reflect the IR light and can be seen by the camera under the display surface (see Figures 2 and 3). Using a combination of intensity thresholding and background subtraction, we extract interesting regions of the camera image and analyze them. The resulting blobs are classified as different object types based on a set of features, including area, eccentricity, perimeter, moments, and the contour shape.
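The segmentation and feature steps above can be sketched as follows. This is a pure-Python illustration on a tiny synthetic image, not the system's vision code; the threshold values and function names are assumptions, and only three of the listed features (area, centroid, eccentricity from second-order moments) are computed.

```python
from collections import deque

def foreground_mask(frame, background, diff_thresh=30, min_intensity=100):
    """A pixel is foreground if it is bright AND differs from the background."""
    return [
        [(f >= min_intensity) and (abs(f - b) >= diff_thresh)
         for f, b in zip(frow, brow)]
        for frow, brow in zip(frame, background)
    ]

def find_blobs(mask):
    """4-connected components by breadth-first search; returns pixel lists."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            queue, pixels = deque([(x, y)]), []
            seen[y][x] = True
            while queue:
                cx, cy = queue.popleft()
                pixels.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            blobs.append(pixels)
    return blobs

def blob_features(pixels):
    """Area, centroid, and eccentricity from second-order central moments."""
    area = len(pixels)
    cx = sum(x for x, _ in pixels) / area
    cy = sum(y for _, y in pixels) / area
    mu20 = sum((x - cx) ** 2 for x, _ in pixels) / area
    mu02 = sum((y - cy) ** 2 for _, y in pixels) / area
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels) / area
    # Eigenvalues of the covariance matrix give the squared axis lengths.
    spread = ((mu20 - mu02) ** 2 + 4 * mu11 ** 2) ** 0.5
    lam1 = (mu20 + mu02 + spread) / 2
    lam2 = (mu20 + mu02 - spread) / 2
    ecc = (1 - lam2 / lam1) ** 0.5 if lam1 > 0 else 0.0
    return {"area": area, "centroid": (cx, cy), "eccentricity": ecc}

# Synthetic 8x6 frame: a bright 4x2 reflection plus one dim changed pixel.
background = [[20] * 8 for _ in range(6)]
frame = [row[:] for row in background]
for y in (2, 3):
    for x in range(1, 5):
        frame[y][x] = 200
frame[0][7] = 60  # changed, but too dim to count as an IR reflection

features = [blob_features(b) for b in find_blobs(foreground_mask(frame, background))]
```

A classifier would then compare each feature dictionary against the known object types; perimeter and contour shape are omitted here for brevity.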

Figure 2: Infrared illuminated objects as seen by the camera beneath the workbench's surface.

Figure 3: Hands and objects as detected by the contour tracker.

Several difficulties arose from the hardware configuration. The foremost problem is that the two light-sources provide very uneven lighting over the desk surface, bright in the middle but weaker towards the borders. In addition, the light rays are not parallel, and the reflection on the mirror surface further exacerbates this effect. As a result, the perceived sizes and shapes of objects on the desk surface can vary depending on their position and orientation. Finally, when the user moves an object, the reflection from his/her hand can also add to the perceived shape. To counter these effects, an additional stage in the recognition process matches recognized objects to objects known to be on the table. This stage filters out misclassifications and bridges complete losses of information about an object lasting several frames. Here, we use the object recognition and tracking capability mainly for "cursor objects." Our focus is fast and accurate position tracking, but the system may be trained on a set of different objects to be used as navigational tools or physical icons [Ishii97].
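One way such a matching stage could work is sketched below. It uses a greedy nearest-neighbour association, which is an assumption rather than the method the system uses; `max_jump` and `max_missed` are hypothetical tuning parameters.

```python
import math

class TrackedObject:
    def __init__(self, label, position):
        self.label = label
        self.position = position
        self.missed = 0  # consecutive frames without a matching detection

def update_tracks(tracks, detections, max_jump=40.0, max_missed=5):
    """Associate per-frame detections [(label, (x, y)), ...] with known objects."""
    unmatched = list(detections)
    for track in tracks:
        best = min(unmatched,
                   key=lambda d: math.dist(d[1], track.position),
                   default=None)
        if best is not None and math.dist(best[1], track.position) <= max_jump:
            # Keep the established label; a one-frame misclassification is ignored.
            track.position = best[1]
            track.missed = 0
            unmatched.remove(best)
        else:
            track.missed += 1  # tolerate a short dropout
    tracks[:] = [t for t in tracks if t.missed <= max_missed]
    tracks.extend(TrackedObject(label, pos) for label, pos in unmatched)
    return tracks

tracks = [TrackedObject("cursor", (100.0, 100.0))]
update_tracks(tracks, [("hand", (104.0, 103.0))])  # mislabeled for one frame
```

After this call the object keeps its "cursor" label at the new position, and an empty frame would only increment its `missed` counter rather than delete it.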

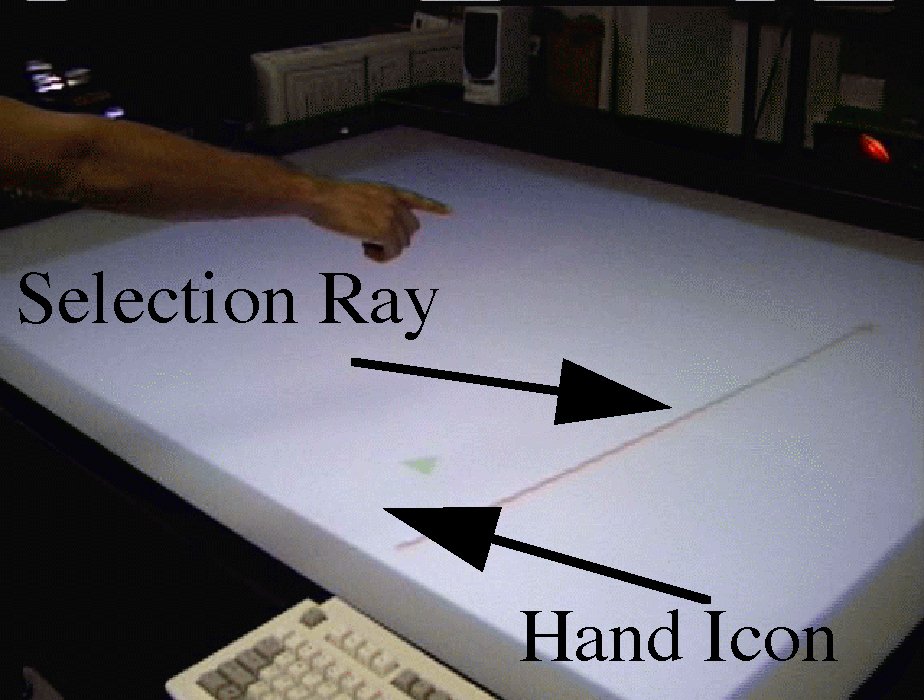

The Perceptive Workbench can recover 3D pointing gestures made by the user above the desk's surface (Figure 4). Such gestures are often useful to refer to objects rendered out of the user's reach on the surface of the desk. With vision-based tracking techniques, the first issue is to determine which information in the camera image is relevant, i.e. which regions represent the user's hand or arm. This task is made even more difficult by variation in user's clothing or skin color and by background activity. Although typically only one user interacts with the environment at a given time, the physical dimensions of large semi-immersive environments such as the workbench invite people to watch and participate. With the workbench, there are few places where a camera can be put to provide reliable hand position information. One camera can be set up next to the table without overly restricting the available space for users, but if a similar second camera were to be used at this location, either multi-user experience or accuracy would be compromised.

Figure 4: Side camera view of pointing gesture.

Figure 5: Shadow of pointing gesture.

Figure 6: Resulting vector derived from the two views.

We have addressed this problem by employing our shadow-based architecture (as described in the next section). The user stands in front of the workbench and extends an arm over the surface. One of the IR light-sources mounted on the ceiling to the left of, and slightly behind the user, shines its light on the desk surface, from where it can be seen by the IR camera under the projector (see Figure 2). When the user moves his/her arm over the desk, it casts a shadow on the desk surface (see Figure 5). From this shadow, and from the known light-source position, we can calculate a plane in which the user's arm must lie. Simultaneously, the second camera to the right of the table records a side view of the desk surface and the user's arm. It detects where the arm enters the image and the position of the fingertip. From this information, it extrapolates two lines in 3D space, on which the observed real-world points must lie. By intersecting these lines with the shadow plane, we get the coordinates of two 3D points, one on the upper arm, and one on the fingertip. This gives us the user's hand position, and the direction in which he/she is pointing. As shown in Figure 6, this information can be used to project a hand position icon and a selection ray in the workbench display.

We must first recover arm direction and fingertip position from both the camera and the shadow image. Since the user is standing in front of the desk and the user's arm is connected to the user's body, the arm's shadow should always touch the image border. Thus our algorithm exploits intensity thresholding and background subtraction to discover regions of change in the image and searches for areas where these touch the front border of the desk surface (which corresponds to the top border of the shadow image or the left border of the camera image). It then takes the middle of the touching area as an approximation for the origin of the arm. For simplicity we will call this point the "shoulder", although in most cases it is not. Tracing the contour of the shadow, the algorithm searches for the point that is farthest away from the shoulder and takes it as the fingertip. The line from the shoulder to the fingertip reveals the 2D direction of the arm.
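The geometry of this step can be illustrated with a short sketch. It assumes an idealized setup: the desk is the plane z = 0, the light is a point source, and the side camera is a pinhole whose ray through the fingertip is already known. All coordinates and function names are hypothetical.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def shadow_on_desk(light, point):
    """Where the light casts the shadow of `point` on the desk plane z = 0."""
    s = light[2] / (light[2] - point[2])
    return tuple(l + s * (p - l) for l, p in zip(light, point))

def shadow_plane_normal(light, shadow_a, shadow_b):
    """Normal of the plane through the light and two points of the arm's shadow."""
    return cross(sub(shadow_a, light), sub(shadow_b, light))

def intersect_ray_plane(origin, direction, normal, plane_point):
    """Point where a camera ray meets the shadow plane, or None if parallel."""
    denom = dot(normal, direction)
    if abs(denom) < 1e-12:
        return None
    t = dot(normal, sub(plane_point, origin)) / denom
    return tuple(o + t * d for o, d in zip(origin, direction))

def farthest_from(shoulder, contour):
    """Fingertip estimate: the 2D contour point farthest from the shoulder."""
    return max(contour,
               key=lambda p: (p[0] - shoulder[0]) ** 2 + (p[1] - shoulder[1]) ** 2)

# Synthetic check: place a fingertip and an arm point, then recover the fingertip.
light = (-1.0, -1.0, 2.0)
camera = (2.0, 0.2, 0.5)
fingertip = (0.5, 0.4, 0.3)
arm_point = (0.5, 0.0, 0.3)

normal = shadow_plane_normal(light,
                             shadow_on_desk(light, fingertip),
                             shadow_on_desk(light, arm_point))
ray = sub(fingertip, camera)  # direction of the side camera's ray to the fingertip
recovered = intersect_ray_plane(camera, ray, normal, light)
```

In this synthetic case `recovered` coincides with `fingertip` up to rounding. A real system would obtain the ray direction from the camera's calibration rather than from the known answer.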

In our experiments, the point thus obtained was coincident with the pointing fingertip in all but a few pathological cases (such as the fingertip pointing straight down at a right angle to the arm). The method does not depend on a pointing gesture, but also works for most other hand shapes, including but not restricted to, a flat horizontally or vertically held hand and a fist. These shapes may be distinguished by analyzing a small section of the side camera image and may be used to trigger specific gesture modes in the future. The computed arm direction is correct as long as the user's arm is not overly bent. In such cases, the algorithm still connected shoulder and fingertip, resulting in a direction somewhere between the direction of the arm and the one given by the hand. Although the absolute resulting pointing position did not match the position towards which the finger was pointing, it still managed to capture the trend of movement very well. Surprisingly, the technique is sensitive enough such that the user can stand at the desk with his/her arm extended over the surface and direct the pointer simply by moving his/her index finger, without arm movement.

Note that another approach would be to switch between several overhead IR spotlights to recover the user's arm position. This would eliminate the need for the side camera but limit the range of motion the user could have over the desk's surface (due to the angle of the cast shadows). In addition, the current switching speed of the spotlights would limit the frame rate.

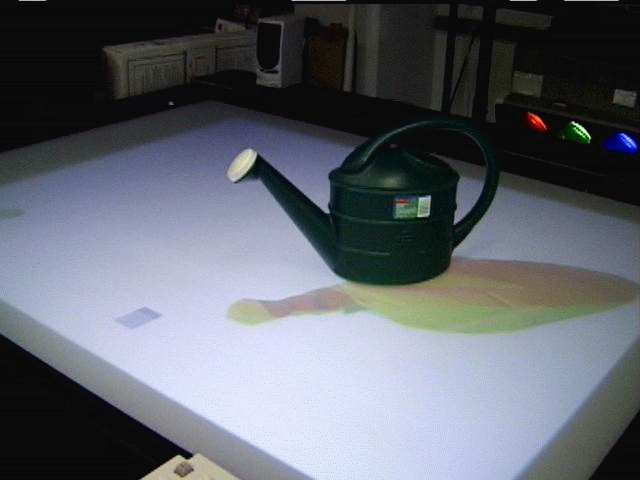

The vision system has been extended for three-dimensional reconstruction of the objects placed on the desk. This ability is desired for several reasons. First, not all objects reflect well in the infrared. While the bottom camera may see a change in intensity when an object is placed on the surface of the desk, the resulting blob may be darker than the normal, uncovered desk surface instead of lighter. Thus, another method is necessary for identification. Secondly, recovering a three-dimensional shape allows workbench users to introduce and share novel objects freely with mobile users. Given the stereoscopic capabilities of the workbench, such 3D object recovery may be a convenient and natural way to include physical objects in a virtual collaboration.

To facilitate the reconstruction process, a ring of 7 infrared light sources is mounted to the ceiling, each one of which can be switched independently by computer control. The camera under the desk records the sequence of shadows an object on the table casts when illuminated by the different lights. By approximating each shadow as a polygon (not necessarily convex)[Rosin95], we create a set of polyhedral "view cones", extending from the light source to the polygons. Intersecting these cones creates a polyhedron that roughly contains the object [Mantyla88].
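The cone intersection can be approximated by a point-membership test: a 3D point lies inside a light's view cone exactly when its projection from that light onto the desk falls inside the corresponding shadow polygon. The sketch below carves a coarse grid of sample points this way. This is a swapped-in sampling technique for illustration, not the polyhedral intersection of [Mantyla88], and the light positions, test object, and grid are assumptions.

```python
def convex_hull(points):
    """Andrew's monotone chain; used here only to synthesize test shadows."""
    pts = sorted(set(points))
    def half(seq):
        out = []
        for p in seq:
            while len(out) >= 2 and (
                (out[-1][0] - out[-2][0]) * (p[1] - out[-2][1])
                - (out[-1][1] - out[-2][1]) * (p[0] - out[-2][0])
            ) <= 0:
                out.pop()
            out.append(p)
        return out[:-1]
    return half(pts) + half(reversed(pts))

def point_in_polygon(pt, poly):
    """Ray-casting test; also valid for non-convex shadow polygons."""
    x, y = pt
    inside = False
    for i in range(len(poly)):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % len(poly)]
        if (y1 > y) != (y2 > y):
            if x < x1 + (y - y1) * (x2 - x1) / (y2 - y1):
                inside = not inside
    return inside

def project_to_desk(light, point):
    """Cast a ray from the light through the point onto the desk plane z = 0."""
    s = light[2] / (light[2] - point[2])  # the point must lie below the light
    return (light[0] + s * (point[0] - light[0]),
            light[1] + s * (point[1] - light[1]))

def inside_all_cones(point, lights, shadows):
    return all(point_in_polygon(project_to_desk(l, point), s)
               for l, s in zip(lights, shadows))

# Synthetic scene: a unit cube on the desk lit by four ceiling lights.
cube = [(x, y, z) for x in (0.0, 1.0) for y in (0.0, 1.0) for z in (0.0, 1.0)]
lights = [(2.5, 0.5, 3.0), (-1.5, 0.5, 3.0), (0.5, 2.5, 3.0), (0.5, -1.5, 3.0)]
shadows = [convex_hull([project_to_desk(l, c) for c in cube]) for l in lights]

step = 0.25
grid = [(-1 + i * step, -1 + j * step, k * step + step / 2)
        for i in range(13) for j in range(13) for k in range(8)]
carved = [p for p in grid if inside_all_cones(p, lights, shadows)]
```

The carved set always contains the true object and shrinks towards it as lights are added, which is consistent with the observation that a handful of views gives a rough but usable model.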

Figure 7: 3D reconstruction of a watering can.

The Perceptive Workbench detects when a new object is placed on the desk surface using the methods above, and the user can initiate the reconstruction by touching a virtual button rendered on the screen. The reconstruction process requires 1-3 seconds to complete. Figure 7 shows the reconstruction of a watering can placed on the desk. The process is fully automated and does not require any specialized hardware (e.g. stereo cameras or laser range finders). On the contrary, the method is extremely cheap, both in hardware and in computational cost. In addition, there is no need for extensive calibration, which is usually necessary in other approaches to recover the exact position or orientation of the object in relation to the camera. We only need to know the approximate position of the light-sources (+/- 2 cm), and we need to adjust the camera to the size of the display surface, which must be done only once. Neither the camera and light-sources nor the object are moved during the reconstruction process. Thus recalibration is unnecessary. We have replaced most mechanical moving parts, which are often prone to wear and imprecision, with a series of light beams from known locations. An obvious limitation of this approach is that we are, at the same time, confined to only a fixed number of different views from which to reconstruct the object. However, Sullivan's work [Sullivan98] and our experience with our system have shown that even for quite complex objects usually 7 to 9 different views are enough to get a reasonable 3D model of the object.

All vision processing is done on two SGI R10000 O2s (one for each camera), which communicate with a display client on an SGI Onyx RE2 via sockets. However, the vision algorithms could be run on one SGI with two digitizer boards or be implemented using semi-custom, inexpensive signal-processing hardware.

Both object and gesture tracking perform at a stable 12-18 frames per second. Frame rate depends on the number of objects on the table and the size of the shadows. Both techniques are able to follow fast motions and complicated trajectories. Latency is currently 0.25-0.33 seconds but has improved since last testing (the acceptable threshold is considered to be at around 0.1s). Surprisingly, this level of latency seems adequate for most pointing gestures. Since the user is provided with continuous feedback about his hand and pointing position and most navigation controls are relative rather than absolute, the user adapts his behavior readily to the system. With object tracking, the physical object itself can provide the user with adequate tactile feedback as the system catches up to the user's manipulations. In general, since the user is moving objects across a very large desk surface, the lag is noticeable but rarely troublesome in the current applications.

Even so, we expect simple improvements in the socket communication between the vision and rendering code and in the vision code itself to improve latency significantly. In addition, due to their architecture, the R10000-based SGI O2's are known to have a less direct video digitizing path than their R5000 counterparts. Thus, by switching to less expensive machines we expect to improve our latency figures.

Calculating the error of the 3D reconstruction process requires choosing known 3D models, performing the reconstruction process, aligning the reconstructed model and the ideal model, and calculating an error measure. For simplicity, a cone and pyramid were chosen. The centers of mass of the ideal and reconstructed models were set to the same point in space, and their principal axes were aligned.

To measure error, we used the Metro tool [Cignoni98]. It approximates the real distance between the two surfaces by choosing a set of (100K-200K) points on the reconstructed surface, and then calculates the two-sided distance (Hausdorff distance) between each of these points and the ideal surface. Mean error for the cone and pyramid averaged 5 millimeters (or 1.6%).
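The kind of measure involved can be shown with a simplified sketch that compares two point sets directly. Metro itself samples one mesh and measures distance to the other surface; approximating both surfaces by sample points, as done here, is an assumption made to keep the example self-contained, and the coordinates are made up.

```python
import math

def one_sided_distances(source, target):
    """For each source point, the distance to its nearest target point."""
    return [min(math.dist(p, q) for q in target) for p in source]

def surface_error(reconstructed, ideal):
    forward = one_sided_distances(reconstructed, ideal)
    backward = one_sided_distances(ideal, reconstructed)
    return {
        "mean": sum(forward) / len(forward),              # mean error of the samples
        "hausdorff": max(max(forward), max(backward)),    # two-sided worst case
    }

ideal = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
reconstructed = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.0), (0.0, 1.0, 0.3)]
error = surface_error(reconstructed, ideal)
```

The mean summarizes typical deviation, while the two-sided maximum exposes the single worst mismatch in either direction.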

While improvements may be made by precisely calibrating the camera and lighting system, by adding more light sources, and by obtaining a silhouette from the side camera (to eliminate ambiguity about the top of the surface), the system meets its goal of providing virtual presences for physical objects in a quick and timely manner that encourages spontaneous interactions.

Figure 8: Mobile player HMD and camera system.

In order to maintain player mobility and the illusion of a self-contained wearable computer, we are experimenting with wireless audio and video transmission as in [Starner97]. Quality of transmitted video/audio significantly affected game play. We maintain that sufficient resources to run our game will eventually be commonly available, whether on the body, or processed in the environment.

We exploit the downward facing camera to recognize player hand gestures using templates or hidden Markov models. Our Markov modeling software is derived from code used in the first author's American Sign Language recognition system. This system could recognize and differentiate between 40 signs in real-time with over 97% accuracy [Starner98]. The first version of WARPING uses feature template matching and the next will leverage HMMs. Features for either model of recognition are currently extracted by segmenting the player's hands with color and then distilling the parameters of their moments (area, bounding ellipse, etc).
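Feature template matching of this kind can be sketched as a nearest-template lookup in feature space. The template names, feature values, scaling constants, and rejection threshold below are invented for illustration and are not taken from the WARPING system.

```python
import math

# Hypothetical templates: (area in pixels, ellipse major axis, ellipse minor axis).
TEMPLATES = {
    "open_palm": (5200.0, 110.0, 80.0),
    "fist": (3000.0, 70.0, 60.0),
    "point": (2400.0, 120.0, 35.0),
}
# Assumed per-feature scales so that no single feature dominates the distance.
SCALE = (1000.0, 20.0, 20.0)

def scaled_distance(a, b):
    return math.sqrt(sum(((x - y) / s) ** 2 for x, y, s in zip(a, b, SCALE)))

def classify(features, templates=TEMPLATES, reject_above=2.0):
    """Return the closest template name, or None if nothing is close enough."""
    name, best = min(
        ((n, scaled_distance(features, t)) for n, t in templates.items()),
        key=lambda item: item[1],
    )
    return name if best <= reject_above else None

label = classify((2950.0, 72.0, 58.0))
```

The rejection threshold keeps arbitrary hand shapes from being forced into the nearest gesture class.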

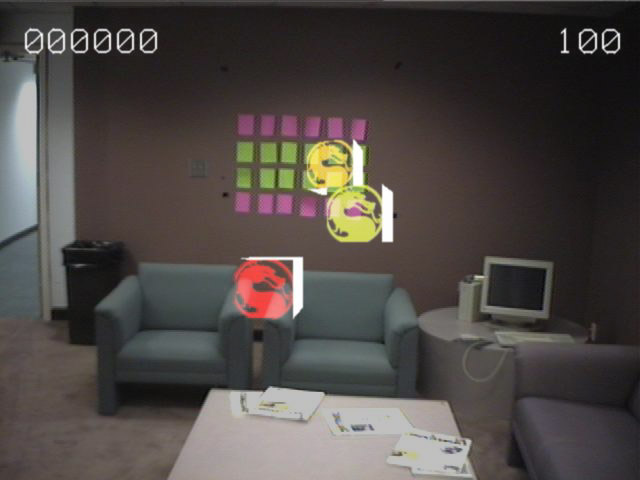

The forward looking camera approximates head rotation by visually tracking fiducials mounted on the walls of the room. In current WARPING implementations, the player is assumed to stay in relatively the same place, allowing the use of the head rotation information for rendering graphics that seem registered to the physical world. In the future, it is hoped that registration will be adequate to clip virtual creatures to physical objects in the room such as the coffee table in Figure 11.

The 3D graphical and sound component of the current WARPING system was created using the Simple Virtual Environment (SVE) toolkit. SVE is a programming library developed by the Georgia Institute of Technology Virtual Environments Group. Built upon OpenGL, the library supports the rapid implementation of interactive 3D worlds. SVE allows applications to selectively alter, enhance, or replace components such as user interactions, animations, rendering, and input device polling [Kessler97]. SVE applications can be compiled to run on both PC and SGI platforms. Silicon Graphics O2's are used as the hardware platform for the WARPING system. Depending on the desired functionality, 1-3 machines are used for the Perceptive Workbench and 1-2 machines for the mobile user.

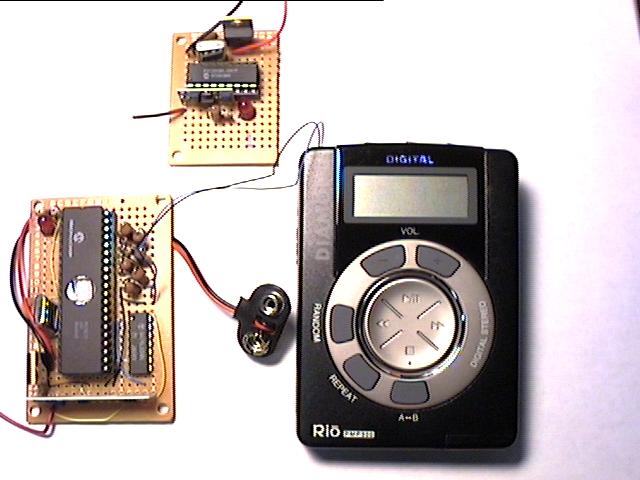

This section presents a much lighter-weight and less expensive infrastructure for WARPING augmented realities that uses a simple wearable computer and radio frequency (RF) beacons. Whereas the system above overlays graphics onto the user's environment, this system employs only audio. As a result, the required infrastructure is much less expensive and computationally complex than traditional augmented reality (AR) systems. This infrastructure is used to develop the game "Guided by Voices" below, which allowed us to explore the unique issues involved in creating an engaging augmented reality audio environment.

Users of this system carry a simple wearable computer that receives location information from transmitters placed in the environment. To play contextually appropriate sounds, the current location is used in conjunction with the list of previously visited locations and the order in which they are visited.
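A minimal sketch of this selection logic follows, assuming a rule table in which each sound requires the current location plus an ordered list of earlier locations. The location names, track names, and rule format are hypothetical.

```python
# Each rule: (location, locations that must have been visited earlier in this order, track).
RULES = [
    ("bridge", (), "bridge_intro.mp3"),
    ("bridge", ("cave", "tower"), "bridge_after_quest.mp3"),
    ("tower", (), "tower.mp3"),
]

def is_subsequence(needed, history):
    """True if `needed` occurs within `history` in the same order."""
    remaining = iter(history)
    return all(step in remaining for step in needed)

def choose_track(current, history, rules=RULES):
    """Pick the most specific rule that matches the current location and history."""
    matches = [
        (len(needed), track)
        for location, needed, track in rules
        if location == current and is_subsequence(needed, history)
    ]
    return max(matches)[1] if matches else None

track = choose_track("bridge", ["cave", "tower"])
```

Visiting the same places in a different order falls back to the generic sound for the location, which is how visit order can shape the narrative.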

The wearable computer consists of an off-the-shelf MPEG 1 Layer 3 (MP3) Diamond Rio™ digital audio player, a Microchip PIC microcontroller, and an RF receiver. The PIC decodes information from the RF receiver into location information and controls the MP3 player, including selecting the correct track and starting and stopping the device at appropriate times. The location beacon consists of a PIC and RF transmitter. The beacon transmits a unique ID approximately once every second. The beacons are small, low power and inexpensive. We hope to environmentally power the beacons to avoid batteries and deploy them as an economical infrastructure for general use to gather location information [Starner97b].

The infrastructure can be configured in several ways that facilitate different forms of interaction between the users and the environment. By controlling the placement of transmitters and which devices record beacon IDs, various levels of privacy can be maintained.

In the Guided by Voices configuration, only the environment transmits IDs so all location information is local to each wearable. In theory, however, the wearable user could reveal himself/herself to the environmental infrastructure if such exposure would add desired functionality. Note that this retention of user control does not occur with the third option above.

While the above section framed the WARPING infrastructure, this section will present two game systems developed using WARPING. The first, MIND-WARPING, uses the mobile and desk-based platforms to create a multi-player shared augmented environment that is a cross between a fighting and an agent controlling game. The second system is a role-playing game similar in style to the early text-based adventure games.

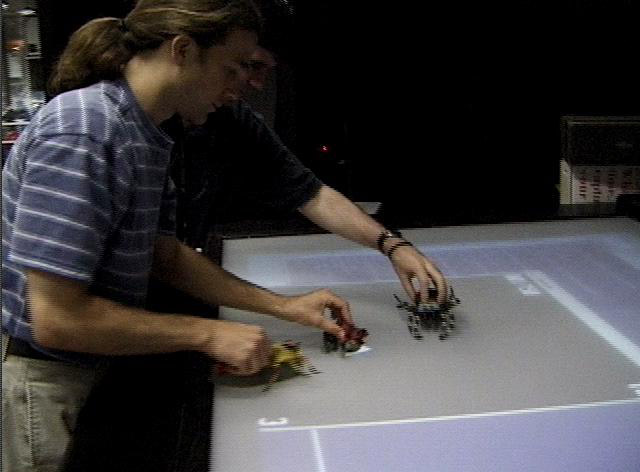

Figure 9: Two Game Moderators controlling monsters on the Perceptive Workbench.

Multiple-Invading Nefarious Demons (MIND) is our first game implementation in the WARPING system. The game pits a "Kung Fu" fighter against an evil magician who directs monsters through his magical desk. The fighter performs magical gestures and shouts to fend off the attacking demons. The following introduction is used to give the players the correct mental model of game play.

"As the eve of the millennium approaches, a little known sect by the name of Delpht prepares for a battle few have known. The last such battle between Delpht's champion and the evil spirit warlord Necron occurred in 999 A.D. Able to interact with the physical plane only once in a thousand years, and even then only through directing pawns from his spirit world, Necron has been biding his time. If Necron can guide enough of his spirits to the physical plane on Dec. 31, 1999, the resulting imbalance will allow the opening of a spiritual gate in downtown Atlanta through which Necron can pass to establish his evil empire. Unfortunately, Delpht's last champion has long since passed to the spiritual realm, and the sect's monks have lost many of their defensive spells to the vagaries of the oral tradition. However, through the miracle of modern science, researchers at Georgia Tech have been able to tune their augmented reality gear to visualize Necron's spirits as they try to pass to the physical realm through the slowly forming gate. Now, the monks are gathering together to channel their energy through their new champion, who will defend this realm with his arsenal of mystical martial arts. Meanwhile, Necron has perfected a magical desktop which allows him to guide his spirits with precision..."

Figure 10: Player forming attack gestures.

Figure 11: Player view composite.

When the mobile player puts on the head-up display, a status screen is visible which allows the user to prepare for the magician (workbench user) to start the next fight. The Perceptive Workbench provides the magician with an overhead view of the playfield, while the fighter sees the graphics registered on the physical world from a first-person perspective. When the magician places one or more opponents (in this case, plastic insects; see Figure 9) onto the virtual gaming table, the player sees the opponents appear on one of three planes of attack: high, middle, or low. For MIND-WARPING, the plane of attack is determined by the type of attacking monster: for example, ants on the floor, spiders on the ceiling, and flies in the middle. To attack the virtual opponents, the fighter performs one of three hand gestures in combination with an appropriate Kung Fu yell ("heee-YAH") to designate the form of the attack. Figure 10 demonstrates the gestures used in MIND-WARPING. The yell is a functional part of the recognition system, indicating the start and end of the gesture. Audio thresholding is sufficient to recognize the yell, though speech recognition technology may be employed for future games. Once the correct combined gesture is performed, the corresponding monster is destroyed, making its death sound and falling from the playfield. However, if the magician succeeds in navigating a monster so that it touches the player, the player loses health points and the monster is destroyed. Figure 11 shows the view from the player's perspective.
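The role of the yell can be illustrated with a minimal sketch of energy-based audio thresholding. This is not the WARPING implementation: the frame size, the threshold value, the function names, and the rule that a gesture clears every monster on its plane are all assumptions made for illustration. Only the monster-to-plane mapping comes from the description above.

```python
from typing import List, Optional, Tuple

# Plane of attack per monster type, as described in the text.
PLANE_OF_MONSTER = {"ant": "low", "fly": "middle", "spider": "high"}


def frame_energies(samples: List[float], frame_size: int) -> List[float]:
    """Mean squared amplitude of each non-overlapping frame."""
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(sum(s * s for s in frame) / frame_size)
    return energies


def find_yell(samples: List[float], frame_size: int = 4,
              threshold: float = 0.25) -> Optional[Tuple[int, int]]:
    """Return (start_frame, end_frame) of the first loud run, end exclusive.

    frame_size and threshold are hypothetical values, not from the paper.
    """
    energies = frame_energies(samples, frame_size)
    start = None
    for i, energy in enumerate(energies):
        if energy > threshold and start is None:
            start = i                      # yell begins: gesture window opens
        elif energy <= threshold and start is not None:
            return (start, i)              # yell ends: gesture window closes
    if start is not None:
        return (start, len(energies))      # yell ran to the end of the buffer
    return None


def resolve_attack(samples: List[float], gesture_plane: str,
                   monsters: List[str]) -> List[str]:
    """Return the monsters that survive; an attack only counts with a yell.

    Assumption: one gesture clears every monster on its plane.
    """
    if find_yell(samples) is None:
        return list(monsters)
    return [m for m in monsters if PLANE_OF_MONSTER[m] != gesture_plane]
```

In this sketch the frames bracketed by `find_yell` would delimit the video frames handed to the gesture recognizer, so a gesture without a yell has no effect.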

While the system is used mostly by the authors and graduate students in the same laboratory, we have found that the experience is surprisingly compelling and balanced. The games master who controls the attacking spirit bugs has a difficult time keeping pace with the player, which is the opposite of what one would expect. In fact, two games masters sometimes team up to attack the player through the desk interface. With four hands controlling monsters on the workbench, the player often loses. Meanwhile, the player's experience is unusual. He sees and hears the monsters overlaid on the physical room (the spirit gate) and responds with sharp gestures and yells. This multi-modal experience seems to engage the player quickly.

The setting of Guided by Voices is a medieval fantasy world, where the player wanders through a soundscape of trolls, dragons, and wizards. The player passes through different settings, such as a dragon's cave and a troll's bridge, meeting friends or foes and gathering objects like a sword and a spellbook. The game play is similar to traditional role-playing games where the player is a character on a quest. The player does not directly interact with computers or graphics. Instead, the wearable observes the player's actions and functions as the game master. All of the game's input comes from where the player chooses to walk in the real-world environment. The narrative of the story changes depending on the path each player takes. The wearable keeps track of the player's state, checking whether he/she has acquired the necessary objects for a specific location (e.g., if you enter the dragon's cave without a sword, the dragon will kill you). Rich environments can be created because each location can serve multiple purposes and play different roles in different situations.
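The state tracking described above can be sketched as a small location-driven game master. This is an illustrative sketch only: the class names, the location names other than the dragon's cave, and the sound event naming scheme are hypothetical, and how the wearable senses that the player has entered a location is outside its scope.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Location:
    name: str
    grants: Optional[str] = None    # object picked up here, if any
    requires: Optional[str] = None  # object needed to survive here, if any


@dataclass
class Player:
    inventory: Set[str] = field(default_factory=set)
    alive: bool = True


def enter(player: Player, location: Location) -> str:
    """Update the player's state and return the sound event to play.

    Event names are hypothetical labels, not the game's actual audio files.
    """
    if not player.alive:
        return "silence"
    if location.requires and location.requires not in player.inventory:
        player.alive = False
        return f"{location.name}_death"
    if location.grants and location.grants not in player.inventory:
        player.inventory.add(location.grants)
        return f"{location.name}_found_{location.grants}"
    # Same place, different state: the location plays a different role.
    return f"{location.name}_ambient"
```

Walking into the cave with and without the sword yields different events from the same physical spot, which is one way a single location can play different roles in different situations.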

One could look upon this audio-only environment as being less immersive than a traditional computer game with graphics. However, we see several advantages to the Guided by Voices game play. For one, instead of moving an avatar in the computer world, the player physically moves through a real-world space. This aids in immersing the player in the game play. Also, many people can play this game simultaneously in the same physical space, creating a real-life social aspect to the game. Unlike most multi-player PC-based video games, Guided by Voices brings people together in the same geographical location. The game could be extended easily to capitalize on and enhance this social interaction. The audio-only nature of the game takes advantage of the player's imagination, drawing them further into the game. For example, instead of being presented with a rendered dragon that may or may not be frightening, the player gets to interact with his/her idea of what a frightening dragon should look like. The sound elements of the game are designed to aid this imagination process.

Figure 12: MP3 player and transmitter/receiver pair.

There were several challenges to be overcome by the sound design for this game. Our first goal in the sound design was to address the key issues of creating a narrative audio space. This involves answering the questions: Where am I? What is going on? How does it feel? What happens next? [Back96] When creating the sounds for this environment, "real" sounds were not appropriate. It is not enough to simply record a sword being drawn from a sheath. Instead, a sound effect must match the listener's mental model of what a sword should sound like. This is especially important in this game, which lacks any visual cues. It is known that if a sound effect does not match the player's mental model, no matter how "real", he/she will not be able to recognize it [Mynatt94]. Therefore, creation of a caricature of the sound is important [Back96]. The game is also set in a fantasy world, so there were seldom "real" sounds available. Instead, we tapped into the cultural conditioning of the player to make the narrative clear. Another design challenge lay in the fact that the game is a nonlinear narrative. This could weaken artistic control, because the user is in control of the narrative flow [Back96]. Special care was taken in content design so that the story's elements would be consistent throughout the various paths of the game. A player's actions weave small narrative elements (sound events) together to form a larger story.

An additional potential obstacle to audio design is that this game might be played in a noisy environment, which the player must navigate while playing the game. The sounds are therefore especially clear and exaggerated, and all speech is well enunciated. Each sound event has three layers:

We feel that for the game to be immersive, entertaining, clear, and not frustrating all of these elements are necessary.

This paper has discussed the potentials for the application of wearable computing and augmented reality to computer gaming. We have demonstrated WARPING, a developing test-bed for augmented reality gaming, and have shown two game implementations which engage multiple players in several different roles. In addition, we have demonstrated how computer vision techniques may be used to create a more integrated interface between the virtual and physical worlds.

[Agre87] Agre, P. & Chapman, D. "Pengi: An implementation of a theory of activity." In Proceedings of the Sixth National Conference on Artificial Intelligence (pp. 268--272), 1987

[Back96] M. Back and D. Des. "Micro-Narratives in Sound Design: Context, Character, and Caricature." Proc. of ICAD, 1996

[Blumberg94] B. Blumberg. "Action-selection in Hamsterdam: Lessons from Ethology." In From Animals to Animats: Proc of the Third Intl Conf on the Simulation of Adaptive Behavior, 1994.

[Bruckman97] A. Bruckman. "MOOSE Crossing: Construction, Community, and Learning in a Networked Virtual World for Kids." PhD Dissertation, MIT Media Lab, May 1997.

[Cignoni98] P. Cignoni, C. Rocchini, R. Scopigno. "Metro: Measuring Error on Simplified Surfaces." Computer Graphics Forum, Blackwell Publishers, vol. 17(2), June 1998, pp. 167-174.

[Colella98] V. Colella, R. Borovoy, and M. Resnick. "Participatory Simulations: Using Computational Objects to Learn about Dynamic Systems." Proceedings of the CHI '98 conference, Los Angeles, April 1998.

[Evard93] R. Evard "Collaborative Networked Communication: MUDs as Systems Tools," Proceedings of the Seventh Systems Administration Conference (LISA VII), USENIX Monterey CA, November 1993.

[Foner97] L. Foner. "Entertaining Agents: A Sociological Case Study." The Proceedings of the First International Conference on Autonomous Agents, 1997.

[Healey98] J. Healey, R. Picard, and F. Dabek. "A New Affect-Perceiving Interface and Its Application to Personalized Music Selection." Proceedings of the 1998 Workshop on Perceptual User Interfaces, San Francisco, CA, November 4-6, 1998.

[Ishii97] H. Ishii and B. Ullmer. "Tangible Bits." CHI 1997, pp. 234-241.

[Jain95] R. Jain, R. Kasturi, and B. Schunck. "Machine Vision." McGraw-Hill, New York, 1995.

[Johnson99] M.P. Johnson, A. Wilson, B. Blumberg, C. Kline, and A. Bobick. "Sympathetic Interfaces: Using a Plush Toy to Direct Synthetic Characters." In Proceedings of CHI 99.

[Kessler97] Drew Kessler, Rob Kooper, and Larry Hodges. "The Simple Virtual Environment Library User's Guide Version 2.0." Graphics, Visualization, and Usability Center, Georgia Institute of Technology, 1997. User's Guide available online at: http://www.cc.gatech.edu/gvu/virtual/SVE/

[Kline99] C. Kline and B. Blumberg. "The Art and Science of Synthetic Character Design." Proceedings of the AISB Symposium on AI and Creativity in Entertainment and Visual Art, Edinburgh, Scotland, 1999.

[Koller97] D. Koller, G. Klinker, E. Rose, D. Breen, R. Whitaker, and M. Tuceryan. "Real-time Vision-Based Camera Tracking for Augmented Reality Applications." In Proceedings of the ACM Symposium on Virtual Reality Software and Technology (VRST-97), Lausanne, Switzerland, September 15-17, 1997, pp. 87-94.

[Kruger95] W. Kruger, C. Bohn, B. Frohlich, H. Schuth, W. Strauss, G. Wesch. "The Responsive Workbench: A Virtual Work Environment." IEEE Computer 28(7), July 1995, pp. 42-48.

[Leibe2000] B. Leibe, T. Starner, W. Ribarsky, D. Krum, B. Singletary, and L. Hodges. "The Perceptive Workbench: Towards Spontaneous and Natural Interaction in Semi-Immersive Virtual Environments." To appear, Proc. of IEEE VR2000, March 2000.

[Mantyla88] M. Mantyla, "An Introduction to Solid Modeling", Computer Science Press, 1988

[Mynatt94] E. Mynatt. "Designing with Auditory Icons." Proc. of ICAD, 1994.

[Rosin95] Paul L. Rosin, Geoff A.W. West, "Nonparametric Segmentation of Curves into Various Representations" IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 17, No 12, December 1995

[Starner97] T. Starner, S. Mann, B. Rhodes, J. Levine, J. Healey, D. Kirsch, R. Picard,and A. Pentland. "Augmented Reality Through Wearable Computing." Presence 6(4). Winter, 1997.

[Starner98] T. Starner, J. Weaver, and A. Pentland. "Real-Time American Sign Language Recognition Using Desk and Wearable Computer-Based Video." IEEE PAMI 20(12), December 1998.

[Sullivan98] S. Sullivan, J. Ponce, "Automatic Model Construction, Pose Estimation, and Object Recognition from Photographs Using Triangular Splines." IEEE PAMI 1998

[Ullmer97] B. Ullmer and H. Ishii. "The metaDESK: Models and Prototypes for Tangible User Interfaces." UIST, 1997

[Want95] R. Want, W. Schilit, N. Adams, R. Gold, K. Petersen, D. Goldberg, J. Ellis, M. Weiser. "An overview of the PARCTAB ubiquitous computing experiment." IEEE Personal Communications 2(6), December 1995.

[Wren95] C. Wren, F. Sparacino, A. Azarbayejani, T. Darrell, T. Starner, A. Kotani, C. Chao, M. Hlavac, K. Russell, A. Pentland. "Perceptive Spaces for Performance and Entertainment: Untethered Interaction Using Computer Vision and Audition", Applied Artificial Intelligence Journal, 1995

[Wren99] C. Wren. "Understanding Expressive Action." Perceptual Computing Technical Report #498. 1999.